Codethink is a software company that works on various client projects; ranging from medical, finance, automotive. In these different areas of engagement; we are trusted to work on various types of problems that clients face with their systems. One such problem encountered required us looking at a userspace software where the program was occasionally not responding on time. This proved to be a challenging problem, due to the fact that the problem is not seen on every cycle or every try. This is how we used Tracecompass to solve that problem.

After initial debugging, we were fairly convinced that this problem was caused by process scheduling, and that the process was not being allocated CPU on time. The scheduler is a component of the kernel that decides which runnable thread will be executed by the CPU next. Since this problem is now a suspected problem from the scheduler we decided to look into how the different threads are getting scheduled. There are many tools to look into Linux kernel internals, the most basic of them is ftrace. This can be used to trace functions or kernel events through hundreds of static event points that are placed inside the kernel code at various places. There are also tools which have used ftrace and extended its functionality even more and one such tool is LTTng. LTTng has been designed to provide a low overhead tracing on production systems. The tracers achieve this great performance through a combination of essential techniques such as per-CPU buffering, RCU data structures, a compact and efficient binary trace format, and more. LTTng disturbs the traced system as little as possible in order to make tracing of subtle race conditions and rare interrupt cascades possible.

Installing LTTng was a fairly straight forward,two step process:

- Add LTTng module to the kernel.

- Build and install the userspace component.

Once LTTng was working on our client hardware, we started looking for different tools that can analyse the trace and present it in a user-friendly form. Lots of viewers are available like:

Babeltrace can be used to convert the generated trace to text format or write custom analysis with python. Looking at all the available options and evaluating them against our task, we decided to go for tracecompass, which is an Open Source application to solve performance and reliability issues by reading and analysing traces and logs of a system. Its goal is to provide views, graphs, metrics, and more to help the user to extract useful information from traces. This is done in a way that is more user-friendly and informative than producing huge text dumps.

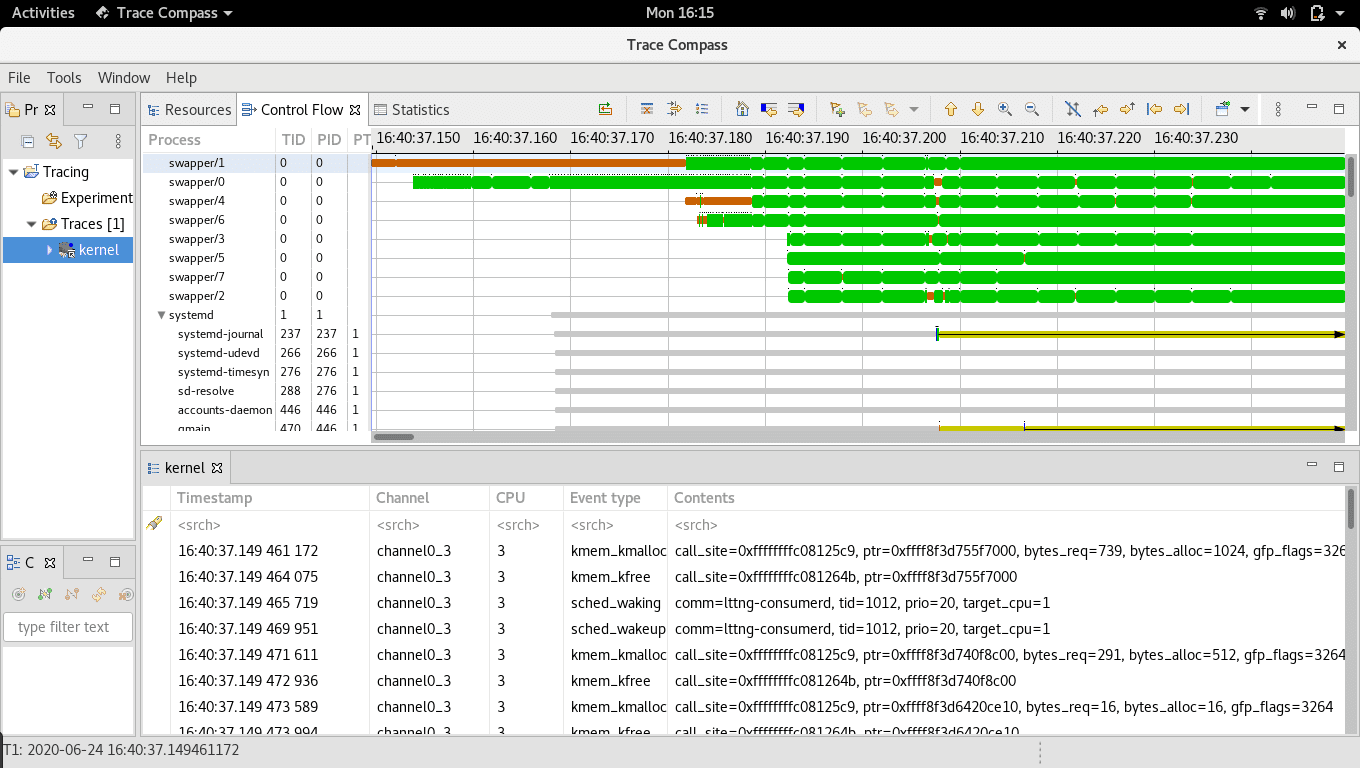

After a decision on the project tools was made, we started setting up the client system for an auto-test where a script will start an LTTng session, trigger the steps needed to reproduce the problem and save the logs. This is left to run overnight, before checking the logs next morning. Then we run another script on it to find the particular cycle where the problem was reproduced (if it was). After a few days of testing we have collected some cycles of logs with the problem, and we started looking at the LTTng trace using tracecompass. Our log collection script had been collecting top logs also and we got the pid and tid from that log which we will need to trace in tracecompass. Tracecompass will then display the data in the form of graphs which are easy to visualize. An example trace is:

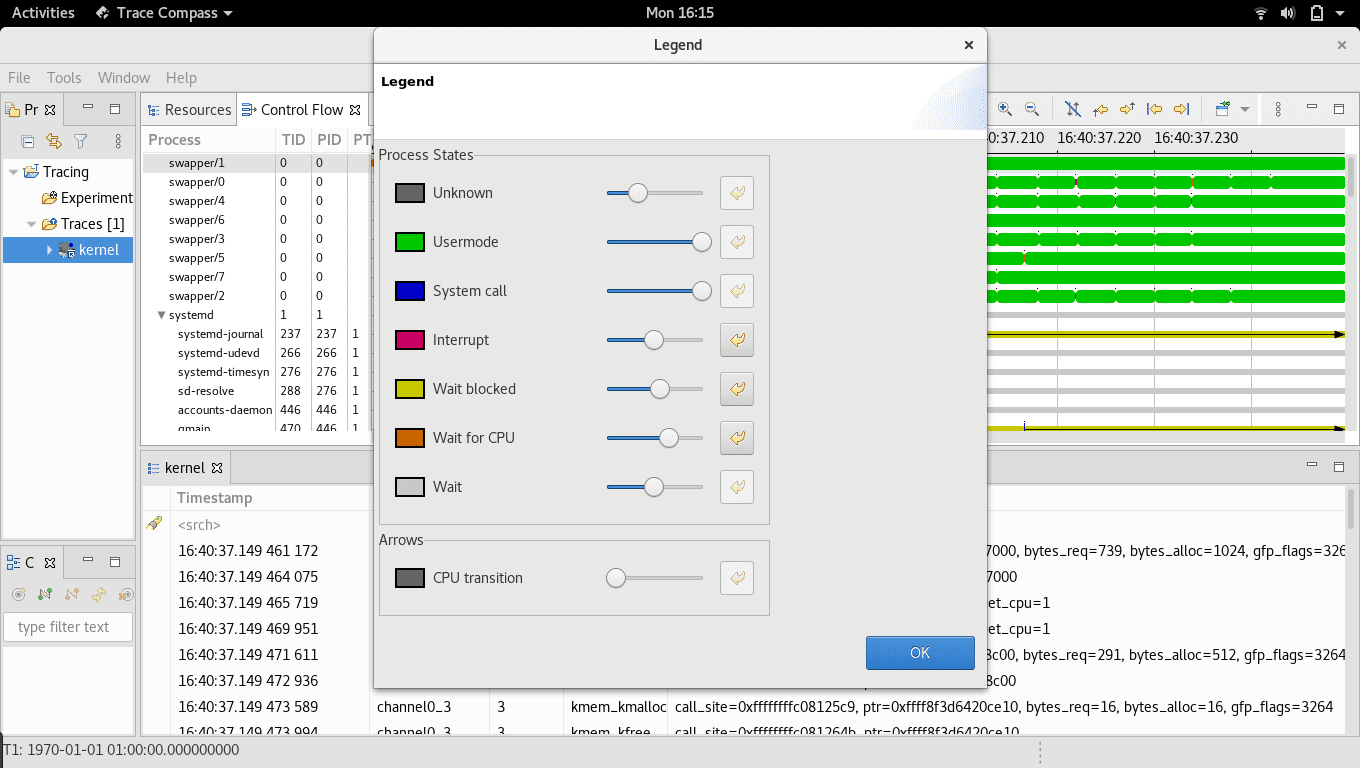

The colour codes used by the graphs are also defined.

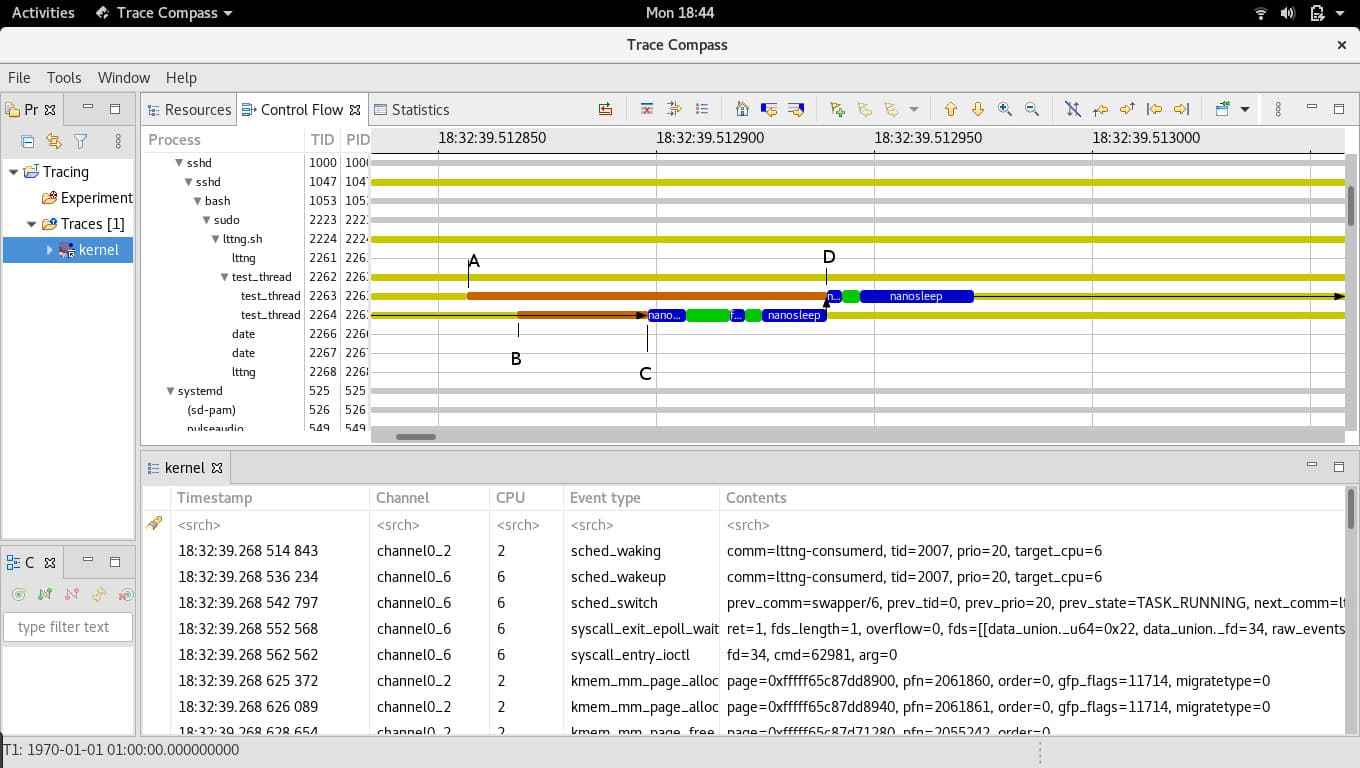

After going through the logs we found the problem and it was indeed because of a thread getting blocked due to the CPU being unavailable. We can not show the client log here, but we recreated the original problem by tweaking the process priorities which can show a similar trace in tracecompass.

The test_thread process with tid: 2263 and tid: 2264 are our test threads. At point A, thread tid:2263 started waiting for CPU, at point B thread tid: 2264 started wait and they both were in “Wait for CPU” mode till point C. At that point the scheduler decided to allot the CPU to tid:2264 even though 2263 had been waiting for more time (from A). The thread tid:2263 got CPU at point D when tid:2264 finished. It was easy to suggest a fix once we knew what the problem was, and the suggested solution was again tested in our auto-test setup to confirm that it actually works before delivering to the client.

Other Content

- Philip Martin, Meet the Team

- Improving systemd’s integration testing infrastructure (part 1)

- A new way to develop on Linux

- RISC-V Summit Europe 2024

- Safety Frontier: A Retrospective on ELISA

- Codethink sponsors Outreachy

- The Linux kernel is a CNA - so what?

- GNOME OS + systemd-sysupdate

- Codethink has achieved ISO 9001:2015 accreditation

- Outreachy internship: Improving end-to-end testing for GNOME

- Lessons learnt from building a distributed system in Rust

- FOSDEM 2024

- Introducing Web UI QAnvas and new features of Quality Assurance Daemon

- Outreachy: Supporting the open source community through mentorship programmes

- Using Git LFS and fast-import together

- Testing in a Box: Streamlining Embedded Systems Testing

- SDV Europe: What Codethink has planned

- How do Hardware Security Modules impact the automotive sector? The final blog in a three part discussion

- How do Hardware Security Modules impact the automotive sector? Part two of a three part discussion

- How do Hardware Security Modules impact the automotive sector? Part one of a three part discussion

- Automated Kernel Testing on RISC-V Hardware

- Automated end-to-end testing for Android Automotive on Hardware

- GUADEC 2023

- Embedded Open Source Summit 2023

- RISC-V: Exploring a Bug in Stack Unwinding

- Adding RISC-V Vector Cryptography Extension support to QEMU

- Introducing Our New Open-Source Tool: Quality Assurance Daemon

- Long Term Maintainability

- FOSDEM 2023

- Think before you Pip

- BuildStream 2.0 is here, just in time for the holidays!

- A Valuable & Comprehensive Firmware Code Review by Codethink

- GNOME OS & Atomic Upgrades on the PinePhone

- Flathub-Codethink Collaboration

- Codethink proudly sponsors GUADEC 2022

- Tracking Down an Obscure Reproducibility Bug in glibc

- Web app test automation with `cdt`

- FOSDEM Testing and Automation talk

- Protecting your project from dependency access problems

- Porting GNOME OS to Microchip's PolarFire Icicle Kit

- YAML Schemas: Validating Data without Writing Code

- Deterministic Construction Service

- Codethink becomes a Microchip Design Partner

- Hamsa: Using an NVIDIA Jetson Development Kit to create a fully open-source Robot Nano Hand

- Using STPA with software-intensive systems

- Codethink achieves ISO 26262 ASIL D Tool Certification

- RISC-V: running GNOME OS on SiFive hardware for the first time

- Automated Linux kernel testing

- Native compilation on Arm servers is so much faster now

- Higher quality of FOSS: How we are helping GNOME to improve their test pipeline

- Full archive