In late 2022, Codethink and exida showed that almost 90% of C (and C++) safety-related bugs were mitigated in some fashion by switching to Rust. We argued that the safety community must justify its continued use of C or C++ if it does not switch to Rust. Three years on, let's revisit what was said and look at what has changed.

Is Rust ready for safety related applications?

You can download the slides, which will make the text below easier to follow. Unfortunately the video of the presentation no longer appears to be available, so what follows is a reconstruction, from my slide notes, of content which was delivered in person. The reconstruction was written looking back at the deck, so if you were present at the symposium and remember the talk, please bear that in mind.

Abstract

As of October 2022, it was getting increasingly difficult to ignore the impact and capabilities of Rust. Rust brings a number of advantages, as a language, to the world of safety related application software. At the time, a commercially supported compiler was already available with support for several devices used in automotive projects.

Our goal on the day was to talk a little about Rust and, hopefully, to convince the delegates to consider using it in their safety applications. We proposed to discuss the options for linking to existing C libraries and for porting code from C to Rust, to explore the stated advantages of Rust compared with C, and not to shy away from the new difficulties that programmers may face when starting new projects in Rust or converting existing codebases.

Finally we explained that we hoped to give the delegates a basis on which they could start to construct an argument around using Rust, either alone or in conjunction with C, in their safety applications.

What do we do with C today

To make C suitable for use in safety applications we have had to surround ourselves with large numbers of rules, cross-checks, guidelines, and tools. There are the MISRA and AUTOSAR rules, and if you move to C++, yet more MISRA and AUTOSAR rules and even the JSF rules. Then there are tool qualification suites, including exida's ctools suite, coding guidelines, static analysis, and so on. All of these are wrapped up with architecture guidelines, application-specific tooling, and more.

All of these things were, or are, valuable, and all of them address, in some part, things which the language is unable to help you with.

But this is, I'm sure you'll agree, a lot to deal with as we consider writing our arguments for using C in a safety context.

Background to Rust

Rust 1.0 was released in May 2015, and the language has been considered 'stable', in the backward-compatibility sense, ever since. Its capabilities grow year on year through the RFC (Request for Comments) and MCP (Major Change Proposal) processes, and although the rate of change is relatively high, the Rust project's commitment to compatibility tempers that somewhat.

Target support improves rapidly: once the compiler can build for a target, library ecosystems catch up very quickly, often by wrapping C libraries until native Rust ones are written. At the time, it was also evident that the Linux kernel was moving toward allowing Rust drivers to exist in-kernel.

The ecosystem is well documented, with a number of 'books' covering the core ecosystem, and every published library has its documentation available in one place (docs.rs). The "official" language specification was the Rust compiler codebase itself; however, there were efforts to write a properly standardisable specification, and Ferrous Systems has recently donated its specification to the Rust Foundation.

Contribution to the ecosystem, and getting support, was (and remains) similar to typical open source projects: there are community forums, GitHub and other code forges, and some consultancies offering Rust-specific help. Governance was mostly handled by small teams within the community, though there is a Rust Foundation tasked with stewarding the ecosystem and the language.

Core Rust

At the time, changes to Rust were typically managed via the RFC or MCP processes, in which a design is discussed and agreed. Changes were then implemented behind feature flags so that normal users were not affected by them. Once a change was considered good enough, there would be a stabilisation proposal, and if that was accepted the change became available to all users. This process remains largely unchanged today, though it has more nuances than we wanted to go into in the presentation slot. A lot of effort has gone into helping people through this process, including documents such as Proposing a change to the language, written by the Rust language design team.

Rust libraries (and some programs), which are called crates, are published to a central package repository called crates.io used by both people and tooling to acquire them. All crates uploaded to crates.io are automatically documented on docs.rs.

The Rust packaging and build tool, Cargo, uses crates.io by default to acquire dependencies for projects (though this can be overridden for software supply-chain management reasons). Cargo then builds your dependencies and your whole project, managing the process for you. Testing is also generally handled, at least initially, via Cargo and Rust's built-in testing support.

An additional benefit of crates.io is a Rust service called Crater, which can run the entire crates ecosystem through a proposed compiler change, allowing changes to be verified against a near-complete corpus of open-source Rust code. This gives even more confidence in the stability of the tooling and ecosystem.

What makes Rust special

The primary thing which draws people to Rust is that the language forces developers to consider the lifetime of data and of data access. This, along with a few other aspects of Rust's design, allows it to make claims about certain aspects of memory safety, ruling out problems such as data races and use-after-free which can plague other languages. The part of the compiler which enforces these rules is called the 'borrow checker', and it runs as part of normal compilation, before any code is generated. Lifetimes are part of Rust's type system, so that expressive type system is there to help you to write correct code.
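As a minimal sketch of lifetimes in practice (the function names are hypothetical, chosen for illustration), `longest` below declares that its result borrows from both of its inputs. The comment in `longest_owned` marks what the borrow checker would reject: handing out a reference that could outlive the data it points to.

```rust
/// Returns the longer of two string slices. The lifetime parameter `'a`
/// tells the compiler that the result borrows from both inputs, so it
/// must not be used after either input has been dropped.
fn longest<'a>(a: &'a str, b: &'a str) -> &'a str {
    if a.len() >= b.len() {
        a
    } else {
        b
    }
}

/// Calls `longest` while both arguments are alive and returns an owned copy.
fn longest_owned(first: &str) -> String {
    let second = String::from("rust"); // dropped at the end of this function
    let result = longest(first, &second);
    // Returning `result` directly as a `&str` would be rejected at compile
    // time, because it may borrow from `second`, which does not outlive this
    // function. Copying it into an owned `String` is accepted.
    result.to_string()
}
```

In C the equivalent mistake (returning a pointer into a local buffer) compiles and fails later at runtime; here it is a build error.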

Because the authors of Rust's standard library are aware of the places where problems could arise, due in no small part to the Rust language helping them, the standard library helps developers to do the right thing in many ways. For example, operations which could otherwise do something dangerous, such as indexing beyond the bounds of an array, panic instead. Panics are a controlled mechanism for dealing with such behaviour at runtime. Better still are the APIs which make the fallibility of such operations explicit, requiring the author of the code to deal with the failure case.
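A small sketch of the difference, using hypothetical sensor-reading helpers: plain indexing (`readings[index]`) would panic if `index` is out of range, whereas the standard `slice::get` and `checked_add` methods return an `Option`. That return type forces the caller to handle the failure case.

```rust
/// Returns the reading at `index`, or `None` if it is out of range.
/// (`readings[index]` would instead panic with "index out of bounds".)
fn reading_at(readings: &[u16], index: usize) -> Option<u16> {
    readings.get(index).copied()
}

/// Sums the readings, returning `None` on overflow instead of wrapping
/// silently or panicking.
fn total(readings: &[u16]) -> Option<u16> {
    readings.iter().try_fold(0u16, |acc, &x| acc.checked_add(x))
}
```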

Beyond the language and the standard library, several pieces of Rust's tooling really help. Rust compiler error messages try to act like a pair programmer, doing their best to help the developer fix their problems. As mentioned above, there are runtime protections such as controlled panics, which developers can take advantage of, or control the existence of, as they see fit. And there is an additional linting tool called Clippy, distributed alongside the compiler, which helps by spotting bad idioms and offering fixes to the developer.

What do beginners struggle with

Newcomers to Rust often face a number of struggles, depending on their past experience (or lack of it) in software. Rust is essentially a procedural language with some fundamental rules around data lifetimes and access lifetimes, and with a behavioural modelling abstraction which it calls traits. This forces a change in thinking around software design, since most traditional design processes are object-oriented.
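To make the contrast with object-oriented design concrete, here is a minimal sketch with a hypothetical `Sensor` trait. Behaviour is described by the trait, including a default method. Plain structs implement it, and generic code works with anything implementing the trait, without base classes or inheritance.

```rust
/// Behaviour shared by anything that can report a voltage (hypothetical).
trait Sensor {
    fn read_millivolts(&self) -> u32;

    /// Default method: implementors get this check for free but may override it.
    fn is_plausible(&self) -> bool {
        self.read_millivolts() <= 5_000
    }
}

/// A trivial implementor that always reports the same value.
struct FixedSensor {
    millivolts: u32,
}

impl Sensor for FixedSensor {
    fn read_millivolts(&self) -> u32 {
        self.millivolts
    }
}

/// Generic over any type implementing `Sensor`.
fn count_plausible<S: Sensor>(sensors: &[S]) -> usize {
    sensors.iter().filter(|s| s.is_plausible()).count()
}
```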

Sometimes Rust is forced on newcomers because of existing projects, but in this space, typically people come to Rust because of the promises it makes about helping programmers to not create certain classes of bugs in their code. Rust is low-level enough that we should consider using it in almost any safety application where we'd be using C "by default" and high-level enough that it offers many benefits once the learning curve is surmounted.

Because Rust is at heart a low-level systems language, its interoperability with C code is very good. There are also tools which help convert C code into Rust, and these can be used as a starting point for understanding how to write software in Rust. But do not let this lead you into false confidence: even very low-level Rust code feels distinct from C code and will require your engineers to rethink their approaches.

Rust is one of the recent neo-languages (at the time of the presentation it was only seven years old, and it has recently celebrated its tenth birthday), and as such it has a significant amount of modern tooling around it. This can cause a lot of confusion for engineers used to a more traditional C or C++ environment built with CMake or GNU Make. At the time, the neo-languages did not have the same maturity of ecosystem support as the old guard, so newcomers also struggled to know which library was best to use, or which devices were well supported, and this can complicate any decision to use Rust in a project.

What else is hard in Rust

While the compiler refusing to build bad code is more helpful than simply relying on a runtime canary, the shift in cognitive load is not in a direction developers are used to, and this can cause difficulty as new engineers come to Rust. Rust trades much of the pain of debugging something once a bug becomes apparent for the work required to demonstrate to the compiler that your design is sound, and many engineers are not used to this. The corollary is that code which compiles is not necessarily correct: no matter how helpful a compiler is, it cannot tell you that you have written the right thing. There is a middle part of the Rust learning curve where developers tend to assume that because the compiler accepted something, it must be correct. Overcoming this overconfidence in the tooling, and returning to a disciplined approach to requirements-driven testing, can be hard for newcomers who are experiencing the power of the Rust language for the first time.
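A minimal illustration, with a hypothetical requirement and function name: the compiler accepts this function equally well whether the comparison is `<=` or `<`. Only a test written against the requirement's boundary tells the two apart.

```rust
/// Hypothetical requirement: the warning lamp shall be on when tyre
/// pressure is at or below 180 kPa.
fn low_pressure_warning(pressure_kpa: u32) -> bool {
    // Writing `<` here instead of `<=` would compile just as happily.
    pressure_kpa <= 180
}

#[cfg(test)]
mod tests {
    use super::low_pressure_warning;

    /// Boundary test derived from the requirement, not from the code.
    #[test]
    fn warning_is_on_at_the_boundary() {
        assert!(low_pressure_warning(180));
        assert!(!low_pressure_warning(181));
    }
}
```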

Perfectly valid Rust code may still not be considered idiomatic. Clippy can catch a number of errors in your code, but it also guides developers toward a style of coding which aims to reduce the cognitive load of reading code written by others. Learning to follow these tools can be difficult, both for newcomers caught up in the power of Rust and for old-guard programmers who are more set in their ways about the design, and even the layout, of code.
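As one example of this guidance, Clippy's `needless_range_loop` lint typically flags the index-based loop in the first (hypothetically named) function below and suggests iterating directly. The second function shows the idiomatic form, which is shorter and has no indexing that could panic.

```rust
/// Valid, C-like Rust that Clippy would typically warn about.
fn sum_indexed(values: &[u32]) -> u32 {
    let mut sum = 0;
    for i in 0..values.len() {
        sum += values[i];
    }
    sum
}

/// The idiomatic equivalent: iterate over the values themselves.
fn sum_idiomatic(values: &[u32]) -> u32 {
    values.iter().sum()
}
```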

Understanding the runtime canaries can be a sticking point as people try to integrate Rust into their products. Rust's panic mechanism is, by default, a controlled unwinding of the program state which can be hooked into (it can also be configured to abort instead). It is possible to make your program fail to build if panics could occur in certain areas of your code, and learning to write code which cannot induce panics is important, but that step comes quite far along the road to Rust mastery and can take a while to get used to. Ultimately, though, no amount of compiler, linter, and ecosystem tooling will help you to avoid systematic semantic errors in your code. Even with Rust, your developers will still need the support of your requirements team and a comprehensive testing approach in order to make good progress.
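A minimal sketch of hooking into a panic, using the standard `std::panic::set_hook` and `std::panic::catch_unwind`. The function names and the hook's behaviour are hypothetical. This only works when panics unwind: with `panic = "abort"` the process would terminate instead, and `catch_unwind` would not return.

```rust
use std::panic;

/// Indexes into `values`; panics with "index out of bounds" if `index`
/// is out of range.
fn value_at(values: &[i32], index: usize) -> i32 {
    values[index]
}

/// Runs `value_at` with a panic hook installed and the panic contained by
/// `catch_unwind`, returning `None` if a panic occurred.
fn guarded_value_at(values: &[i32], index: usize) -> Option<i32> {
    // Hypothetical handler: a real system might record the fault or request
    // a transition to a safe state here, and would usually install its hook
    // once at start-up rather than on every call.
    panic::set_hook(Box::new(|info| {
        if let Some(location) = info.location() {
            eprintln!("panic contained at {}:{}", location.file(), location.line());
        }
    }));
    let outcome = panic::catch_unwind(|| value_at(values, index));
    // Unregister our hook, restoring the default one.
    let _ = panic::take_hook();
    outcome.ok()
}
```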

Coexistence with other code (C)

At the time the talk was given, significant effort had already been put into allowing Linux device drivers to be written in Rust and used in the Linux kernel. This was a huge step forward for automotive developers who need to create device drivers for custom automotive hardware and who want to take advantage of Rust's language benefits. This coexistence is possible primarily because Rust is designed to be a systems programming language and so has interoperability with C built in. The kernel has many very well designed interfaces which are explicit about the lifetimes of objects, permitting reasonably simple, or at least comprehensible, bindings into the Rust world. This interoperability is achieved by means of Rust's safe/unsafe dichotomy and its FFI (foreign function interface).

The Rust project talks about "safety" but it's not safety in the same sense as the delegates at the symposium would have thought of it. Rust makes a few very constrained claims about what it considers 'safe'. But because computers, and the real world, are not built around Rust's safety model, it still permits developers to express to the compiler that they have manually verified that the Rust safety guarantees are still met by the codebase. These two worlds, safe and unsafe, live together by means of very constrained boundaries at which the assumptions Rust makes can be asserted back to the compiler.

This is done by means of the keyword unsafe, which does double duty. It marks things which the compiler cannot verify on its own, and which need human assistance to confirm that no rules are broken. It also marks the places where a human has done the work to contain the "unsafety" and re-establish the constraints that allow the compiler to continue making the assumptions it needs in order to support the developer properly.
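A minimal sketch of both roles, with hypothetical function names. `unsafe fn` declares an obligation that the compiler cannot check, documented in a `# Safety` section. The `unsafe { ... }` block in the safe wrapper is where a human asserts that the obligation has been met, here by checking the index first.

```rust
/// Returns the byte at `index` without a bounds check.
///
/// # Safety
///
/// The caller must guarantee that `index < values.len()`.
unsafe fn byte_unchecked(values: &[u8], index: usize) -> u8 {
    // SAFETY: the caller has promised that `index` is in bounds.
    unsafe { *values.get_unchecked(index) }
}

/// Safe wrapper: it re-establishes the precondition itself, so callers
/// never need to write `unsafe`.
fn byte_at(values: &[u8], index: usize) -> Option<u8> {
    if index < values.len() {
        // SAFETY: `index` was checked against `values.len()` just above.
        Some(unsafe { byte_unchecked(values, index) })
    } else {
        None
    }
}
```

Keeping the `unsafe` region this small is what makes it practical to audit.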

With all of the above, developers can then use Rust's ability to reference symbols in C code (or export symbols with C linkage) by means of Rust's FFI. FFI is inherently unsafe, and so your developers will need to understand and appreciate all of the safety rules before engaging in this kind of development. The Rust ecosystem provides documentation around safe/unsafe and the FFI to help you to develop this expertise.
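As a hedged sketch of calling into C, the block below declares `strlen` from the C standard library, which the Rust standard library already links against on common hosted targets. It then wraps the call in a safe function. The `unsafe extern` block syntax assumes Rust 1.82 or later. `usize` stands in for C's `size_t`, which matches on mainstream targets.

```rust
use std::ffi::{c_char, CStr};

// Declaration of a function provided by the C standard library.
unsafe extern "C" {
    fn strlen(s: *const c_char) -> usize;
}

/// Safe wrapper around the C `strlen`.
fn c_string_length(s: &CStr) -> usize {
    // SAFETY: a `CStr` is always a valid, NUL-terminated string that stays
    // alive for the duration of this call, which is all `strlen` requires.
    unsafe { strlen(s.as_ptr()) }
}
```

The type of the wrapper's argument carries the C function's precondition. Callers therefore cannot pass a pointer that lacks a terminating NUL.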

As you'd expect, the Rust ecosystem provides tooling to assist with managing all this. cargo geiger determines how much unsafe code, and of what kind, exists in your software and its dependency tree. cargo miri interprets the compiler's intermediate representation of Rust and can check for undefined behaviour at runtime, typically while running your test suite. This gives you evidence, though not proof, that the second kind of unsafe usage mentioned above is correct on the paths your tests exercise.
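The kind of code Miri is useful for looks like the hypothetical `copy_prefix` below, which copies memory through raw pointers. Running its test with a nightly toolchain and the Miri component installed (`cargo +nightly miri test`) executes the copy in the interpreter. If the length were wrongly computed as `src.len()` alone, Miri would be expected to report the out-of-bounds write as undefined behaviour.

```rust
use std::ptr;

/// Copies as much of `src` as fits into the front of `dst`, returning the
/// number of bytes copied.
fn copy_prefix(src: &[u8], dst: &mut [u8]) -> usize {
    let n = src.len().min(dst.len());
    // SAFETY: both pointers are valid for `n` bytes, and because `dst` is a
    // unique (`&mut`) borrow the two regions cannot overlap.
    unsafe { ptr::copy_nonoverlapping(src.as_ptr(), dst.as_mut_ptr(), n) };
    n
}

#[cfg(test)]
mod tests {
    use super::copy_prefix;

    #[test]
    fn copies_the_shorter_length() {
        let mut dst = [0u8; 3];
        assert_eq!(copy_prefix(&[1, 2, 3, 4], &mut dst), 3);
        assert_eq!(dst, [1, 2, 3]);
    }
}
```

In real code the safe `dst[..n].copy_from_slice(&src[..n])` does the same job without `unsafe`; the raw-pointer form is shown only to give Miri something to check.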

Testing in Rust

Rust's main build tool, Cargo, has testing mechanisms built into it. Unit tests are written as annotated functions directly in your codebase, which tightly couples unit tests to the code they cover. Integration tests are written in separate source files in a tests directory alongside your codebase. Any examples in your code documentation are automatically extracted, compiled, and run as tests, ensuring that documentation examples keep working as you develop your software. The Cargo manual covers most of how these work, and the rustdoc manual covers documentation testing.
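A sketch of a documentation test, assuming a library crate with the hypothetical name `speed_limits`. When `cargo test` runs, the fenced example inside the doc comment is compiled and executed as a test. Because doc tests are built against the library like external code, the example imports the function by crate name.

```rust
// Part of a library crate assumed to be named `speed_limits` (hypothetical).

/// Clamps a requested speed to the configured limit.
///
/// ~~~
/// use speed_limits::clamp_speed;
/// assert_eq!(clamp_speed(130, 100), 100);
/// assert_eq!(clamp_speed(80, 100), 80);
/// ~~~
pub fn clamp_speed(requested_kph: u32, limit_kph: u32) -> u32 {
    requested_kph.min(limit_kph)
}
```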

In addition to the built-in testing framework, there is a rich ecosystem of additional testing libraries such as frameworks for mocking out parts of your design, benchmarking time-critical code sections, or fuzzing your software to help to ensure there are no crashes associated with unexpected or corrupt inputs. Each of these has good documentation to help you to use them in your project.

There is also a lot of other tooling out there to help. I mentioned Miri briefly before: it lets you run your tests in such a way that undefined behaviour can be found and addressed. There is also Tarpaulin, a Cargo add-on which produces code-coverage reports showing whether your tests cover as much code as they need to for you to be confident in the codebase. Once again, documentation is a big thing in the Rust ecosystem, so there is plenty available for these tools too.

Finally, you need to be able to test the tooling itself. As you'd expect, the compiler and core tooling have extensive test suites in their repositories, and you could run all of those tests yourself if you build your own Rust toolchain. Beyond that, initiatives such as Ferrocene offer even more confidence, and qualification should you require it. Whatever means you use to acquire your toolchain, we recommend that you establish your own baseline of confidence in the tooling.

C2Rust conversion

C2Rust is a project supported by DARPA. The C2Rust tool transpiles C99-compliant code into Rust. It does not produce idiomatic, or necessarily nice, Rust, but it does the syntactic conversion so that you can focus on the semantics of the software.

The GitHub repository contains instructions for downloading, building, and installing it on Ubuntu Linux, and there is a demo at https://c2rust.com/ which you can use to try things out on smaller pieces of code.

In terms of trust, you have the same problem you would have with any other transpiler or code generator: you need to develop trust in the tool for yourself. C2Rust explicitly states that it expects a human being to be involved in dealing with its output. It is the first step along the path to switching to Rust, not a silver bullet.

The tool effectively takes unsafe C code and produces the equivalent unsafe Rust code. Your Rust engineers should then refactor and re-engineer that code until the unsafety is isolated in places where it is necessary and well audited, and the rest of the code is in safe Rust.
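To illustrate that refactoring step, the first function below is written in the style of transpiled code: a raw pointer plus a length, with C-like wrapping arithmetic. It is an illustrative sketch, not actual C2Rust output, and the names are hypothetical. The second is a possible refactored version in which a slice carries its own length and no `unsafe` is needed.

```rust
/// Transpiler-style signature: raw pointer and length, as in the C original.
///
/// # Safety
///
/// `p` must point to `n` readable, initialised `i32` values.
pub unsafe extern "C" fn sum_raw(p: *const i32, n: usize) -> i32 {
    let mut total: i32 = 0;
    let mut i: usize = 0;
    while i < n {
        // SAFETY: guaranteed by the caller contract above.
        total = total.wrapping_add(unsafe { *p.add(i) });
        i += 1;
    }
    total
}

/// Refactored: the slice carries its length, so there is no unsafe code.
/// Wrapping addition is kept deliberately to preserve the original's
/// behaviour until the requirements say otherwise.
pub fn sum(values: &[i32]) -> i32 {
    values.iter().fold(0i32, |acc, &x| acc.wrapping_add(x))
}
```

Keeping both versions side by side during the port lets tests confirm they agree before the raw-pointer version is retired.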

Tool support

The Rust community (often referred to as rust-lang) provides, free of encumbrance, all the tooling required to get going with Rust. The licences in use in the community are usually Apache 2.0 or MIT/BSD terms, which permit use in proprietary situations. In addition, organisations such as Ferrous Systems, through Ferrocene, offer long-term support, qualified compilers, and other ISO 26262 and ASIL-related assistance and tooling, though there are costs associated with those.

Ultimately there is the question of how you can develop trust in the tooling. The community and ecosystem are working toward providing those assurances, though those of you familiar with Ken Thompson's seminal essay "Reflections on Trusting Trust" will be aware that this is a long and painstaking process. As with any change in your approach to software in safety applications, choosing Rust brings with it some amount of risk. Mitigations for those risks will vary depending on your particular applications; however, the basic design and goals of the Rust language and core ecosystem already mitigate many of the C-related risks you currently carry.

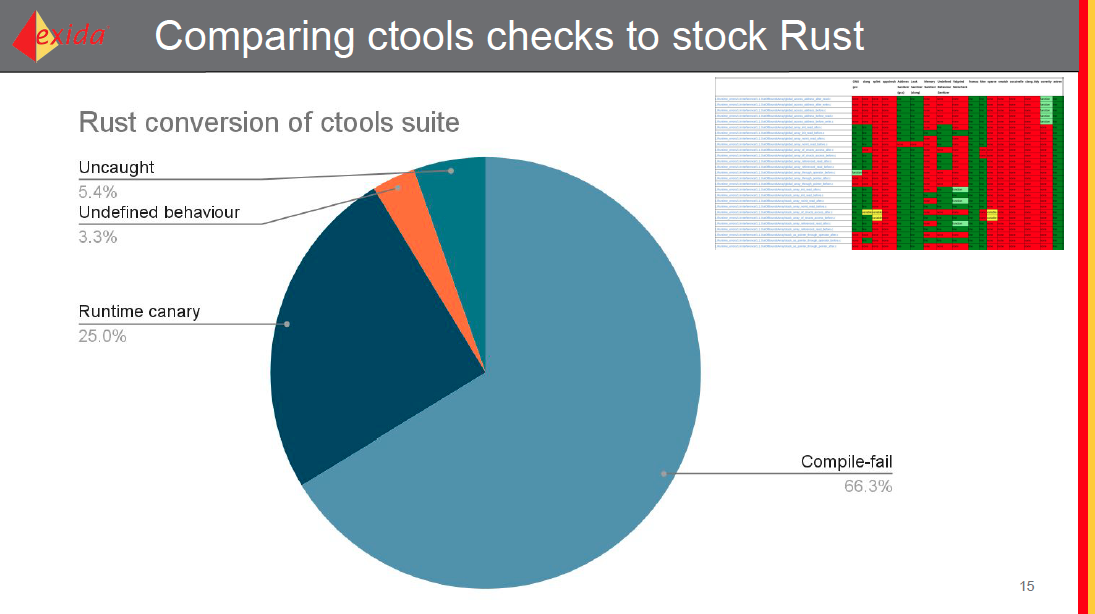

Comparing ctools checks to stock Rust

In preparation for this talk, we went through the exida ctools repository and considered around a hundred of the C test cases. ctools was presented by Michał Szczepankiewicz, Krystian Radlak, Piotr Serwa, and Lukas Bulwahn at the exida 2020 Symposium, in Session 14: "Tool-based code verification for C and C++ in dependable projects – systematic approach". In red you can see a snapshot of the language insecurities left uncaught by 17 different C static analysis tools.

Assuming that we did not want to use unsafe unless it was literally impossible to demonstrate how Rust helps without it, we set about seeing how a default Rust toolchain handles these cases.

- Rust, by construction, prevents many of the things checked for in the ctools suite such as use-after-free or leaked pointers. A full two thirds of the test cases are simply not compilable.

- At runtime, Rust's controlled panics would catch a further 25% of the tests. With the use of the no-panic crate, the majority of these could be converted into compile-time failures, which could bring the "not possible by construction" percentage up as high as 90%.

- Three of the tests in the suite explicitly work with copying memory around. To do this we would need unsafe, but failure to use the correct copying semantics would likely be flagged by using Miri to test for undefined behaviour.

- There are a small number of things which Rust simply won't detect by default, because their behaviour is clearly defined by standards such as IEEE 754 for floating-point numbers. Further tooling work will likely be needed to improve on this.

- In most of the compile-fail cases, Rust gives a useful error message explaining why a certain pattern is not acceptable in the language. Rust's error messages tend to lead the way in the compiler world these days.
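The floating-point behaviours mentioned in the list are easy to demonstrate. In the sketch below (function names are hypothetical), each check compiles without error and runs without panicking, because IEEE 754 defines these results. The explicit-tolerance comparison is one common mitigation, not the only one.

```rust
/// Compares two values within an explicit absolute tolerance, since `==`
/// on computed floating-point values rarely matches what a requirement means.
fn approx_eq(a: f64, b: f64, tolerance: f64) -> bool {
    (a - b).abs() <= tolerance
}

/// Returns true if all of these IEEE 754 behaviours hold; none of them is
/// a compile error or a panic in Rust.
fn ieee754_behaviours_hold() -> bool {
    let nan = f64::NAN;
    let sum = 0.1_f64 + 0.2;
    let divisor = 0.0_f64;
    nan.partial_cmp(&nan).is_none()      // NaN is unordered, even with itself
        && sum != 0.3                    // 0.1 + 0.2 is not exactly 0.3 in binary
        && (1.0 / divisor).is_infinite() // division by zero yields infinity
        && approx_eq(sum, 0.3, 1e-12)    // but it is within a small tolerance
}
```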

Because these outcomes follow from the design of the language rather than from any particular toolchain version, we would not expect this distribution to have changed over the past couple of years.

ISO 26262 part six and Rust

This slide assumes reasonably intimate knowledge of ISO 26262. Since that is a proprietary document I cannot include it here for reference, so I'll summarise briefly.

If we take tables one, three, six, and seven from ISO 26262 part six as examples, we can look at each item in those tables and characterise them in terms of the Rust ecosystem and language. Many of the items in these tables are realistically things that your design process, review process and other human-related activities cover. These are not coloured.

A significant number of the items are either covered by built-in features of the Rust language or core tooling, or are at least human activities which can be supported by the tooling. As we would expect, there were still some aspects of ISO 26262 which needed tooling to be developed; however, these are few, and work on some of that tooling is already underway.

Briefly, table one considers modelling and coding guidelines, and everywhere Rust touches directly is covered nicely. Table three considers software design principles, and while most of it is for humans, Rust at worst merely gets out of the way of the principles in question, and at best actively assists in following them. Table six is about software unit design and implementation. Rust gets much more involved here, and other than one unit design principle (1a, which requires each subprogram or function to have a single entry and a single exit), Rust assists wholeheartedly. (Interestingly, because Rust encourages the use of the ? operator to neatly propagate runtime errors through the type system, it actively works against 1a.) Table seven covers unit verification. Tooling for data flow and control flow analysis has been developed since the talk, one example of which is Flowistry, available as a VSCode plugin.
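A sketch of the tension with 1a, using hypothetical function names. In the first function each `?` is an early return, so the function has three exit points. The second restructures the same logic around a single `match` that is also the function's only exit. The price is that both inputs are always parsed, which is harmless here.

```rust
use std::num::ParseIntError;

/// Idiomatic Rust: each `?` returns early on error.
fn parse_limits(lower: &str, upper: &str) -> Result<(u32, u32), ParseIntError> {
    let lo: u32 = lower.trim().parse()?; // exit point 1 (error)
    let hi: u32 = upper.trim().parse()?; // exit point 2 (error)
    Ok((lo, hi))                         // exit point 3 (success)
}

/// Single-exit equivalent: one `match` produces the only return value.
fn parse_limits_single_exit(lower: &str, upper: &str) -> Result<(u32, u32), ParseIntError> {
    match (lower.trim().parse::<u32>(), upper.trim().parse::<u32>()) {
        (Ok(lo), Ok(hi)) => Ok((lo, hi)),
        (Err(e), _) | (_, Err(e)) => Err(e),
    }
}
```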

Is there an argument to use Rust

Up until this point in the presentation we were posing the question as "What argument can we make for using Rust in safety applications?", particularly given the experience and long-history of using C, and the effort invested into standards such as MISRA.

However, we would much rather turn the question on its head, and at that point we did.

Is there an argument for not using Rust

Let's instead ask "What is your argument for not using Rust?"

There are some fairly simple reasons, such as AUTOSAR MCAL availability, target availability or support tier, a particular C library you need for which wrapping would be inappropriate, or simply which batteries you do or do not get in a Rust distribution.

But think also about what you actually need: in many cases there is a good argument for developing the support you require yourself, and contributing it to the growing community of Rust users in the safety world.

And consider this: is the cost of wrapping a C library, or even rewriting it in Rust, greater than the cost of the risks associated with continuing to use C?

Finally, ask yourselves: "What is my argument for using C? How can I justify the risks and dangers of continuing to work in C when there are alternatives like Rust to consider?"

The idea that the delegates ought to be justifying their use of C in their safety argumentation was one which very few at the conference had even considered before this point.

References

Several of the links are simply integrated above, however the rest from the slide deck are:

- Programming Rust: Fast, Safe Systems Development, Blandy, Orendorff & Tindall, O'Reilly, 2nd edition, June 2021

- Ferrous Systems and Ferrocene

- The Rust programming language

- JSF / MISRA C rules

- MISRA Exemplar

- AUTOSAR rules

- MISRA

- Software metrics post from exida

In addition, at the time there had been a presentation on Rust in Adaptive AUTOSAR at the ELISA summit.

How has the landscape changed in the past years?

In the past few years, the fundamentals of Rust have not changed dramatically. Ecosystem support for various aspects of the language has improved, and there are many new tools (some of which were mentioned above) which can be used to address concerns the safety community may have had before. However, the main shift has been in the understanding of just how poor an idea it is to continue using C (and, to a lesser extent, C++) without careful consideration of the risks.

There have been advances in possible C replacements such as Zig, and in possible updates or alternatives to C++ such as Circle and Cpp2, though the latter two are very much band-aids or wrappers around the issues in C++. The C++ committees concerned with safety and the language have worked on proposals such as the Safe C++ paper and C++ safety profiles, though these also feel a little like add-ons rather than the paradigm shift which Rust provides.

The risks of C/C++ memory unsafety in particular continue to be talked about in increasing amounts by a more and more diverse set of people. For example, Sternum wrote in 2024 about memory safety from the perspective of IoT, and Runsafe wrote about memory safety vulnerabilities. While both of those articles are particularly focussed on cybersecurity, the close relationship between safety and cybersecurity means that they are relevant to us in the safety context as well. Furthermore, the continued evolution of sites such as the ISRG's Memory safety site point to the software community embracing the idea of shifting to languages such as Rust more and more as time passes.

When we look at the safety community itself, we can also see a shift toward Rust. The Eclipse Kuksa project has embraced Rust as part of Eclipse SDV. The ELISA project recently held a workshop at which our own Paul Albertella, along with Daniel Krippner of ETAS, presented on the role of Rust in safety-critical applications.

Finally we would be remiss not to consider the direct approaches being taken to utilise Rust in safety critical areas which are emerging. The ongoing work on Ferrocene along with the Rust Foundation's Safety Critical Rust Consortium are good examples; the latter of which publishes the https://arewesafetycriticalyet.org/ website, the most useful page of which at the moment is probably the lists of members of the coding guidelines and tooling subcommittees. In addition, last year a paper about Bringing Rust to Safety-Critical Systems in Space was published.

Would Codethink recommend using Rust in safety applications?

Given that, as mentioned above, we spoke about Rust at an ELISA workshop, it might be reasonable to assume that Codethink believes Rust is ready for safety applications. To a large extent we do believe that to be the case, but with reservations like those we expressed a few years ago: Rust won't be right for everyone, or for every situation, yet. There remains a lot of work to be done to complete the language specification, and then further work on the standard library and other parts of the ecosystem, before every traditional safety scenario could be comfortable with Rust.

Some of that work we have been involved with, and the rest we keep an eye on with enthusiasm.

However, we are already actively using Rust in our own safety work. In February, at FOSDEM, we open-sourced and spoke about our Trustable software framework (which is now an Eclipse project) as well as open-sourcing our Safety Monitor project which is written in Rust. This was a precursor to our announcement of a baseline safety assessment of Codethink's Trustable Reproducible Linux OS. We firmly believe that using Rust in the sort of situation where, rather than fully traditional ISO 26262-style safety, you are on board with the Trustable approach to safety, is completely viable and indeed desirable.

There is a long way to go, but where in 2022 we asked "What is my argument for using C? How can I justify the risks and dangers of continuing to work in C when there are alternatives like Rust to consider?", we now feel that the better question is "What is my argument for not choosing Rust?" For us, the answer to that question is "I have no good argument not to".

We choose Rust.
