Exploring how Codethink used embedded Rust to measure Linux’s scheduling performance with an external clock, while maintaining bit-for-bit reproducibility of the testing firmware.

Before choosing a Linux-based operating system on a multi-core processor for a safety-critical task, we must first be certain of its real-time capabilities. These can be demonstrated through repeated long-duration “soak” testing of a running system, but such results are less valuable if all timing measurements are referenced to the system’s own clock. To help demonstrate the real-time scheduling capabilities of Codethink’s Trustable Reproducible Linux-based Operating System (CTRL OS) we employed custom embedded Rust firmware on an external microcontroller, with its own clock, to monitor the timings of Linux processes. Moreover, as our Trustable Software Framework (TSF) stipulates, the Rust toolchain lets us compile that firmware reproducibly and in a controlled environment, just like any other testing tool.

The Linux kernel provides the SCHED_DEADLINE mode to allocate CPU time to threads with hard real-time constraints running on the same processor core [1]. SCHED_DEADLINE threads declare their mode and constraints to the kernel with the sched_setattr syscall, specifying three timing parameters and one optional flag [2]:

- Period: how often the kernel should provide the thread with its runtime, in ns

- Deadline: the time in each period by which the thread must have finished its execution for that period, in ns

- Runtime: the thread’s expected worst-case execution time in each period, in ns

- RECLAIM flag (optional): whether the kernel should provide the thread with unused CPU time in a period if the thread has not finished its execution
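As a rough illustration of these parameters, the sketch below mirrors the layout of the kernel's sched_attr structure in Rust and fills it in for SCHED_DEADLINE. The helper name deadline_attr is hypothetical and is not taken from rusty-worker. The sketch only checks the documented ordering runtime ≤ deadline ≤ period, and it does not make the syscall itself.

```rust
/// Mirrors the original 48-byte layout of the kernel's `struct sched_attr`
/// as documented in sched_setattr(2). Newer kernels append further fields.
#[repr(C)]
#[derive(Debug, Clone, Copy, PartialEq, Eq)]
pub struct SchedAttr {
    pub size: u32,
    pub sched_policy: u32,
    pub sched_flags: u64,
    pub sched_nice: i32,
    pub sched_priority: u32,
    pub sched_runtime: u64,  // ns
    pub sched_deadline: u64, // ns
    pub sched_period: u64,   // ns
}

/// Policy number and flag value from the Linux UAPI headers.
pub const SCHED_DEADLINE: u32 = 6;
pub const SCHED_FLAG_RECLAIM: u64 = 0x02;

/// Hypothetical helper: builds the attributes for a SCHED_DEADLINE thread.
/// The kernel applies further checks (for example minimum values) that are
/// not modelled here.
pub fn deadline_attr(
    runtime_ns: u64,
    deadline_ns: u64,
    period_ns: u64,
    reclaim: bool,
) -> Result<SchedAttr, &'static str> {
    if runtime_ns == 0 {
        return Err("runtime must be non-zero");
    }
    if runtime_ns > deadline_ns {
        return Err("runtime must not exceed deadline");
    }
    if deadline_ns > period_ns {
        return Err("deadline must not exceed period");
    }
    Ok(SchedAttr {
        size: std::mem::size_of::<SchedAttr>() as u32,
        sched_policy: SCHED_DEADLINE,
        sched_flags: if reclaim { SCHED_FLAG_RECLAIM } else { 0 },
        sched_nice: 0,
        sched_priority: 0, // must be zero for SCHED_DEADLINE
        sched_runtime: runtime_ns,
        sched_deadline: deadline_ns,
        sched_period: period_ns,
    })
}
```

For example, deadline_attr(1_000_000, 5_000_000, 10_000_000, true) describes a thread that needs 1 ms of CPU time within the first 5 ms of every 10 ms period, with reclaiming enabled.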

Using the earliest deadline first algorithm, the kernel then balances CPU time between SCHED_DEADLINE threads such that each meets its own scheduling parameters. If it would be impossible to allocate CPU time to a new thread without violating its own or existing threads’ requirements, the kernel rejects the sched_setattr call. SCHED_DEADLINE thus provides a theoretical basis for Linux as a powerful, open-source, and real-time operating system family.
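The admission decision can be pictured as a utilisation check. The following is a deliberately simplified model rather than the kernel's actual implementation: it sums runtime/period over all reservations and compares the total with a capacity limit. The type Reservation, the function admits, and the limit parameter are all names invented for this sketch; the kernel's default cap on real-time bandwidth is 95% per CPU.

```rust
/// One SCHED_DEADLINE reservation, reduced to the two values that determine
/// its long-run CPU share.
#[derive(Debug, Clone, Copy)]
pub struct Reservation {
    pub runtime_ns: u64,
    pub period_ns: u64,
}

impl Reservation {
    /// Fraction of one CPU that this reservation consumes.
    pub fn utilisation(&self) -> f64 {
        self.runtime_ns as f64 / self.period_ns as f64
    }
}

/// Simplified admission test: accept the candidate only if the total
/// utilisation stays within `limit` (e.g. 0.95) on each of `cpus` cores.
pub fn admits(existing: &[Reservation], candidate: Reservation, cpus: u32, limit: f64) -> bool {
    let total: f64 =
        existing.iter().map(Reservation::utilisation).sum::<f64>() + candidate.utilisation();
    total <= cpus as f64 * limit
}
```

With two existing threads that each use 4 ms in every 10 ms on a single core, a third thread asking for 1 ms per 10 ms fits under a 0.95 limit, while one asking for 2 ms per 10 ms does not.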

However, to put this into practice, and work towards certifying Linux’s real-time scheduling as a safety function of CTRL OS, we must evidence that a set of SCHED_DEADLINE threads can be successfully scheduled on real hardware repeatedly, over many thousands of hours of soak testing. Codethink provides the userspace program rusty-worker for this purpose. rusty-worker has two threads: a SCHED_DEADLINE “worker” thread, and a SCHED_OTHER “monitor” thread. (SCHED_OTHER is the default scheduling mode for Linux threads, subjecting them to round-robin, not deadline, scheduling.) rusty-worker’s monitor thread initially configures the sched_setattr parameters of the worker according to user configuration. It then periodically reports the worker’s measured scheduling parameters, specifically runtime and period, to two consumers: the systemd journal [3], for post-test analysis, and Codethink’s open-source safety-monitor [4], which runs on the same system and triggers runtime mitigations.

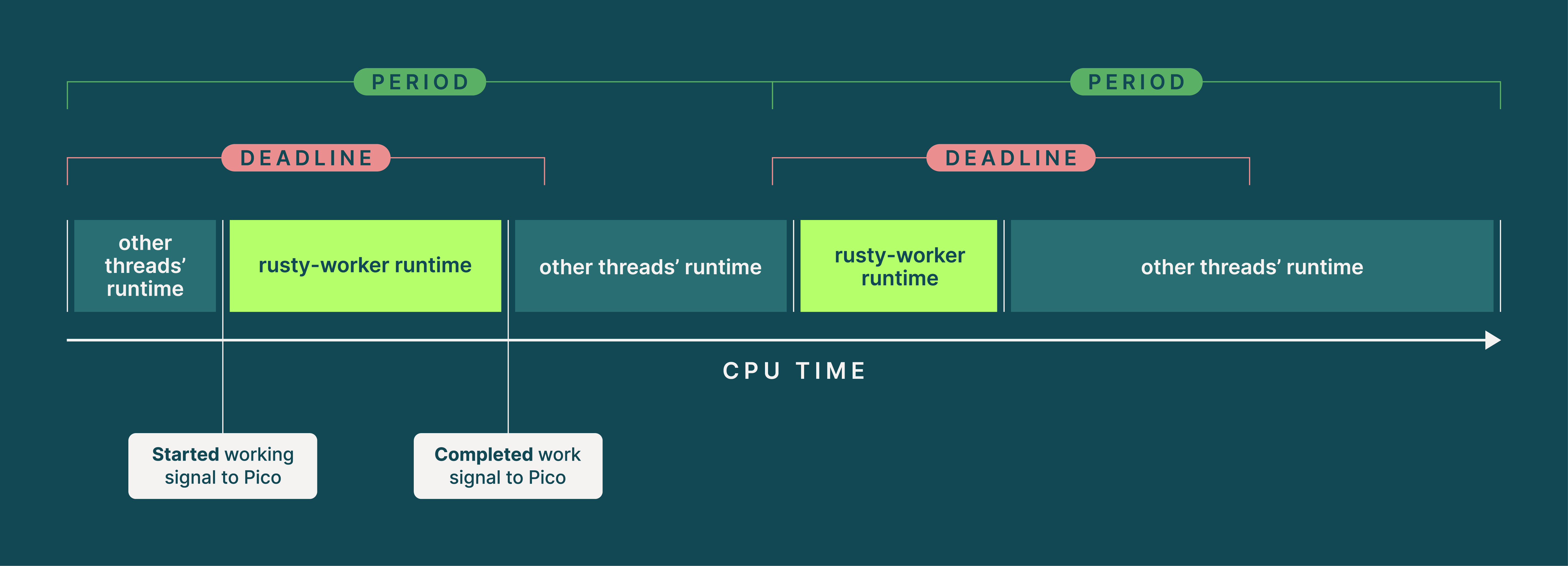

rusty-worker’s SCHED_DEADLINE worker thread shares CPU time with other threads. It declares its period, deadline, and worst-case runtime to the kernel with the sched_setattr syscall, and the kernel ensures that it receives all its runtime before each period’s real-time deadline by balancing it against other threads. Note that rusty-worker’s real runtime, and the position of that runtime in the period, may differ between periods; however, execution should always finish before the deadline expires.

The safety-monitor relies on rusty-worker’s extrinsic reports of scheduling period and runtime to make safety decisions (e.g. should the system be restarted by allowing a watchdog timer to expire?), and CTRL OS’s engineers rely on the post-test reports to investigate regressions and remedy them. If there were a problem with the device-under-test’s clock, a rusty-worker’s true runtime and period might differ from what it reported, without safety-monitor or engineers being any the wiser. To guard against this, we modified rusty-worker to send signals on a UART serial port when it started and completed execution for a period. As the UART protocol is, by definition, asynchronous, a device on the other end of the serial port can time these signals and derive its own external measurement of scheduling parameters without relying on the device-under-test’s clock: the time between a started and completed signal is the runtime, and the time between two started signals is the period.
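The derivation described above can be sketched in a few lines of Rust. This is an illustrative reconstruction and not code from vfrs: the Signal and Event types, the microsecond timestamps, and the function derive are assumptions made for the example, and the real wire encoding of the signals is not shown here.

```rust
/// The two signals rusty-worker sends in each period.
#[derive(Debug, Clone, Copy, PartialEq, Eq)]
pub enum Signal {
    Started,
    Completed,
}

/// A signal stamped with the external device's own clock (assumed to be in µs).
#[derive(Debug, Clone, Copy, PartialEq, Eq)]
pub struct Event {
    pub signal: Signal,
    pub timestamp_us: u64,
}

#[derive(Debug, Default, PartialEq, Eq)]
pub struct Measurements {
    pub runtimes_us: Vec<u64>,
    pub periods_us: Vec<u64>,
}

/// Runtime = Started -> next Completed; period = Started -> next Started.
pub fn derive(events: &[Event]) -> Measurements {
    let mut out = Measurements::default();
    let mut last_start: Option<u64> = None; // previous Started, for periods
    let mut open_start: Option<u64> = None; // Started still awaiting Completed
    for e in events {
        match e.signal {
            Signal::Started => {
                if let Some(prev) = last_start {
                    out.periods_us.push(e.timestamp_us.saturating_sub(prev));
                }
                last_start = Some(e.timestamp_us);
                open_start = Some(e.timestamp_us);
            }
            Signal::Completed => {
                // A Completed without a preceding Started is ignored.
                if let Some(start) = open_start.take() {
                    out.runtimes_us.push(e.timestamp_us.saturating_sub(start));
                }
            }
        }
    }
    out
}
```

Because every timestamp comes from the measuring device, nothing in this calculation depends on the clock of the device-under-test.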

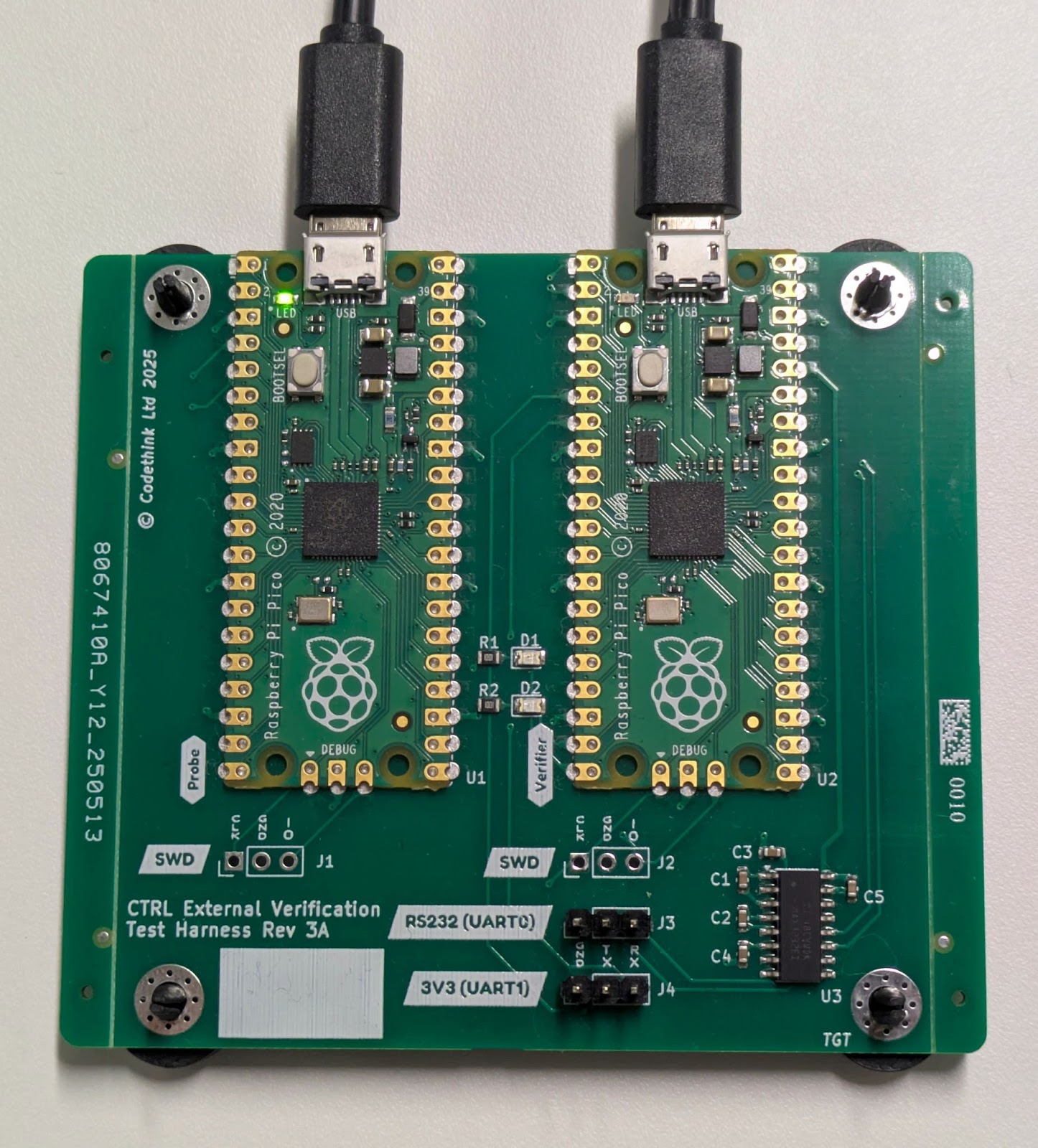

We chose a Raspberry Pi Pico as our external measurement device. It is mounted on a custom printed circuit board with a second Pico, which acts as a probe to flash the first Pico with firmware before each test, and a logic-level shifter so signals from both RS232 and 3.3 V level UART devices can be analysed. The Pico is based on the RP2040 ARM Cortex-M0+ microcontroller, and has first-class support for embedded Rust, without the overhead of an operating system. We thus set about writing Rust verification firmware (vfrs) for the Pico, utilising the rp-hal (Hardware Abstraction Layer) crate [5] to build up an interrupt-driven architecture that provides accurate timings of signals on the UART line. vfrs reports its measurements of rusty-worker’s runtime and period on the device-under-test over USB to a CI runner. The CI runner aggregates the external measurements with rusty-worker’s reports to the systemd journal, performing pairwise matching of measurement sessions, before uploading to an OpenSearch data lake for statistical analysis.

The custom PCB used for external scheduling measurement in CTRL OS’s test rigs. The left-hand Pico U1 serves as a Debug Probe [6], flashing the right-hand Pico U2 with a controlled build of vfrs in each test. The right-hand Pico connects to the CTRL OS device-under-test via an RS232 UART (J3) or a 3.3 V UART (J4), and to the test runner via USB.

We chose Rust for vfrs despite the well-documented and extensive C/C++ SDK provided by the Raspberry Pi Pico’s manufacturers [7]. Our reasoning for this was threefold. Firstly, HALs take advantage of Rust’s type and reference systems to ensure illegal hardware configurations and memory-unsafe operations are rejected at compile-time, not runtime, giving us more certainty about the Pico’s stability and accuracy over hours of soak testing. The only code exempted from the Rust compiler’s memory safety checks must be presented in explicitly marked unsafe code blocks, alongside human-written safety argumentation and justification.

Secondly, as the Rust code needed to bootstrap interrupts, USB and UARTs on a Pico is more verbose than the equivalent C, less information is hidden from engineers and safety assessors about how we use the RP2040’s registers and peripherals, giving more certainty around its exact behaviour and timing accuracy. For example, as we needed to calculate the Pico’s clock frequency (125 MHz) in code, we realised that our methodology would be inappropriate for the fastest rusty-worker processes with runtimes of hundreds of nanoseconds; since one tick at 125 MHz lasts 8 ns, this equates to only around a hundred Pico reference clock ticks or fewer.
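The resolution limit follows from simple arithmetic on the clock frequency. The sketch below converts between ticks and nanoseconds, assuming a counter that advances once per cycle of the 125 MHz clock mentioned above. The constant and function names are invented for the example, and vfrs may take its timestamps from a different counter.

```rust
/// Clock frequency quoted in the text, in Hz.
pub const SYS_CLOCK_HZ: u64 = 125_000_000;

/// Converts a tick count to whole nanoseconds (rounding down).
/// The u128 intermediate avoids overflow for large tick counts.
pub fn ticks_to_ns(ticks: u64, clock_hz: u64) -> u64 {
    ((ticks as u128 * 1_000_000_000u128) / clock_hz as u128) as u64
}

/// Converts a duration in nanoseconds to whole ticks (rounding down).
pub fn ns_to_ticks(ns: u64, clock_hz: u64) -> u64 {
    ((ns as u128 * clock_hz as u128) / 1_000_000_000u128) as u64
}
```

At 125 MHz a single tick lasts 8 ns, so an 800 ns runtime spans only 100 ticks, whereas a 710 µs runtime spans 88,750 ticks and can be resolved far more comfortably.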

Lastly, we chose Rust as its embedded toolchain can be used in a controlled build system just as easily as its desktop toolchain. CTRL OS is developed in accordance with the Trustable Software Framework (TSF), which asserts that “All tests for CTRL OS, and its build and test environments, are constructed from controlled/mirrored sources and are reproducible” [8]. We achieved this by defining vfrs as a BuildStream element integrated into the CI image for CTRL OS’s tests [9]. This element specifies vfrs’s source code, dependency crates, build commands and freedesktop-sdk’s Rust toolchain, with minimal patching to add the thumbv6m-none-eabi target and specify an LLVM rather than GCC backend. Since we build vfrs like any other testing component, we can make the same guarantees about its provenance (asserting the origins of source code, dependencies, build environment, and hence the final binary) and bit-for-bit reproducibility. We achieve this without the ARM toolchain wrangling associated with the C equivalent, such as handling vendor-specific compiler versions, nested Git submodules, and build scripts which can download arbitrary binaries from the Internet at compile-time.
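Bit-for-bit reproducibility is straightforward to test in principle: build the firmware twice from the same inputs and compare the resulting binaries byte by byte. The sketch below shows one way such a comparison might look. It is not part of Codethink's tooling, and the function names are made up for illustration.

```rust
/// Returns the offset of the first byte at which two build outputs differ,
/// or None if they are identical. A length mismatch counts as a difference
/// at the end of the shorter input.
pub fn first_difference(a: &[u8], b: &[u8]) -> Option<usize> {
    if let Some(i) = a.iter().zip(b.iter()).position(|(x, y)| x != y) {
        return Some(i);
    }
    if a.len() != b.len() {
        Some(a.len().min(b.len()))
    } else {
        None
    }
}

/// Two artefacts are bit-for-bit reproducible only if no byte differs.
pub fn is_reproducible(a: &[u8], b: &[u8]) -> bool {
    first_difference(a, b).is_none()
}
```

In practice the two byte slices would come from reading the artefacts of two independent builds, for example with std::fs::read, and a reported offset gives a starting point for finding the source of non-determinism.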

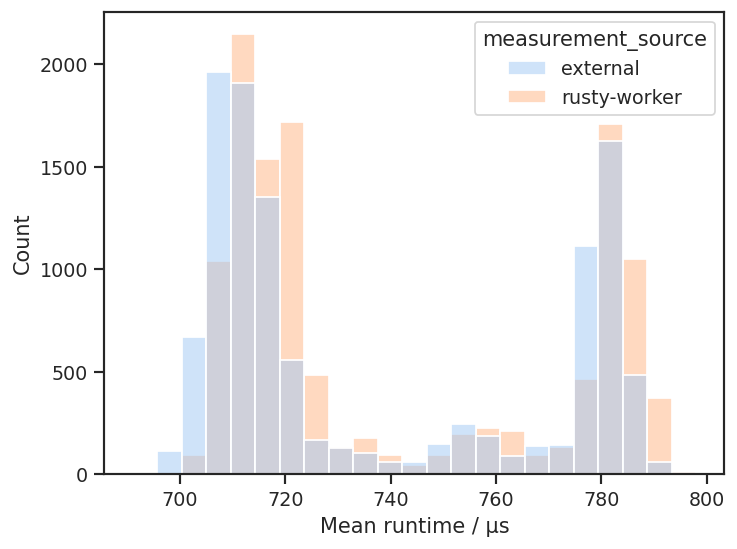

We perform weekly analysis of the external and rusty-worker scheduling measurements stored in OpenSearch, both in version-controlled Jupyter notebooks and with automatic validators that provide scores for CTRL OS’s Trustable compliance report [10]. With CTRL OS and rusty-workers running on an ARM64 Rockchip RK3588-based test system, we found exceptional correlation between rusty-worker and external measurements of runtime and period, with matching distributions and summary statistics to within 10 µs. These external measurement soak tests gave us great confidence in the many millions of samples of scheduling metrics collected in other soak tests on the same hardware. Furthermore, as external measurement requires that a rusty-worker’s worker thread interacts with a TTY serial device, we predicted and observed improved scheduling performance for the entire system when CTRL OS ran with the PREEMPT_RT kernel configuration option enabled [11]. This was because, with PREEMPT_RT, the SCHED_DEADLINE worker thread may be interrupted (or pre-empted) while it works through the notoriously non-deterministic spin locks of the kernel’s TTY layer when sending signals to the Pico.
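A check of the kind described above, that two sets of measurements agree to within a tolerance, might be sketched as follows. The actual analysis lives in Jupyter notebooks and validators that are not shown in this article, so the Summary type, the choice of statistics, and the function names here are assumptions made for illustration.

```rust
/// A few summary statistics for one set of samples, in microseconds.
#[derive(Debug, Clone, Copy, PartialEq)]
pub struct Summary {
    pub mean_us: f64,
    pub min_us: f64,
    pub max_us: f64,
}

/// Summarises a non-empty slice of samples; returns None if it is empty.
pub fn summarise(samples_us: &[f64]) -> Option<Summary> {
    if samples_us.is_empty() {
        return None;
    }
    let sum: f64 = samples_us.iter().sum();
    let min = samples_us.iter().copied().fold(f64::INFINITY, f64::min);
    let max = samples_us.iter().copied().fold(f64::NEG_INFINITY, f64::max);
    Some(Summary {
        mean_us: sum / samples_us.len() as f64,
        min_us: min,
        max_us: max,
    })
}

/// True if every statistic of `a` is within `tolerance_us` of that of `b`.
pub fn agree_within(a: &Summary, b: &Summary, tolerance_us: f64) -> bool {
    (a.mean_us - b.mean_us).abs() <= tolerance_us
        && (a.min_us - b.min_us).abs() <= tolerance_us
        && (a.max_us - b.max_us).abs() <= tolerance_us
}
```

Calling agree_within with the summaries of the rusty-worker and external samples and a tolerance of 10.0 expresses the 10 µs agreement criterion as a single pass or fail result.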

A histogram showing the mean elapsed runtime of a rusty-worker as measured by the rusty-worker itself and externally by vfrs on a Pico. Elapsed runtime plotted here is the time between the “started working” and “work completed” signals from rusty-worker, rather than the runtime specified in sched_setattr. These signals may have been sent in different blocks of runtime in the same period if the thread was pre-empted, so we see two peaks in the distribution: one at 710 µs without pre-emption, and one at 780 µs with pre-emption. Importantly though, the orange rusty-worker distribution maps well to the blue vfrs distribution.

In summary, external measurement, powered by embedded Rust firmware, allowed us to robustly evidence the real-time scheduling capabilities of a Linux environment, while maintaining tight control of test environment provenance and stability.

If you are interested in Codethink’s CTRL OS, Trustable Software Framework, or build engineering and embedded software expertise, then please get in touch at connect@codethink.co.uk.

1. https://www.kernel.org/doc/html/latest/scheduler/sched-deadline.html
2. https://www.man7.org/linux/man-pages/man2/sched_setattr.2.html
3. https://www.man7.org/linux/man-pages/man8/systemd-journald.service.8.html
4. https://gitlab.com/CodethinkLabs/safety-monitor/safety-monitor
5. https://github.com/rp-rs/rp-hal
6. https://github.com/raspberrypi/debugprobe
7. https://www.raspberrypi.com/documentation/microcontrollers/c_sdk.html
8. https://codethinklabs.gitlab.io/trustable/trustable/doorstop/TA.html#ta-tests
9. https://buildstream.build/
10. https://codethinklabs.gitlab.io/trustable/trustable/trudag/validators.html
11. https://dl.acm.org/doi/10.1145/3297714
